Elasticsearch: Bulk ingest data

Often you think of a solution to a simple problem and once you come up with that solution you realise you need to apply this to a large dataset. In this post, I will explain how I deployed a simple solution to a larger dataset while preparing the system for future growth. Here’s the state of play before changes in my client’s eCommerce system:

Existing System:

- Login to aggregator’s portal to retrieve datafeed URI

- Login to customer admin interface to create or update merchant details

- Create cron job to pull data from partner URI after inital setup

- Cron job dumps data in MySQL

- Client shopping UI presents search field and filters to customers to search and use

- Search result is extremely slow (homepage: 2.03 s, search results page:35.73 s, product page:28.68 s ). Notice the search results and product pages are completely unacceptable

Proposed System:

Phase 1:

- Follow steps 1-4 of existing system

- Export MySQL data as csv

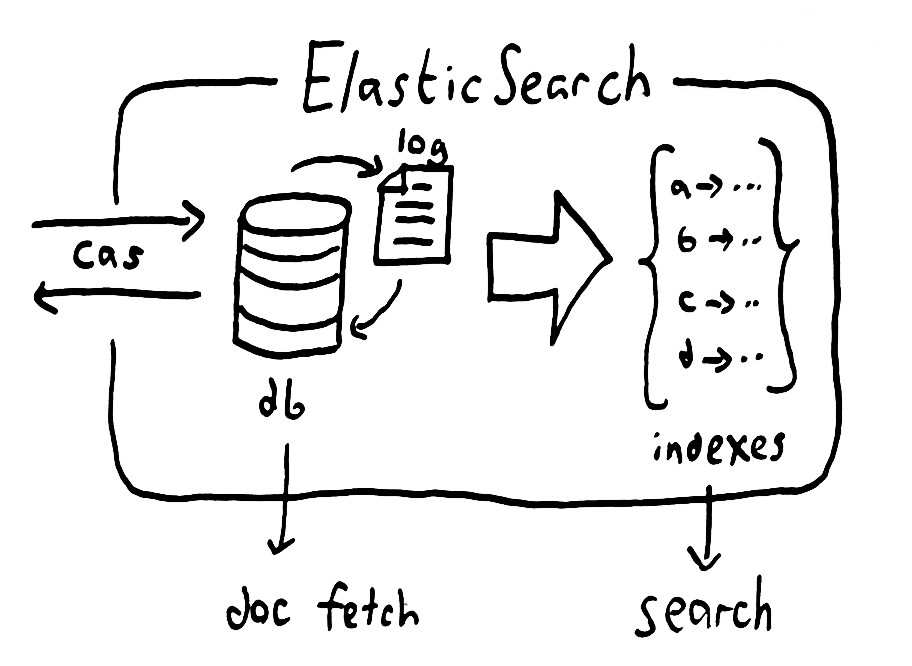

- Create an instance of Elasticsearch with an index to store product data

- Export MySQL data as CSV

- Create script that bulk insert the exported data into Elasticsearch

- On command line, search Elasticsearch instance using various product attributes (product name, type, category, size etc.). Check the time speed of search results.

Phase 2:

- Build a search interface that uses Elasticsearch

- Display search results with pagination

- Add filters to search results

- AB Test existing search interface and Elasticsearch based and compare conversion (actual sales)

- Switch on the best solution – Elasticsearch

A few libraries already exists that can solve some of these challenges e.g

- Elasticsearch-CSV – https://www.npmjs.com/package/elasticsearch-csv

- SearchKit – https://github.com/searchkit/searchkit

- PingDom – https://tools.pingdom.com

In the next post, I will dive deep into how I used Elasticsearch-CSV to quickly ingest merchant data and the response I got