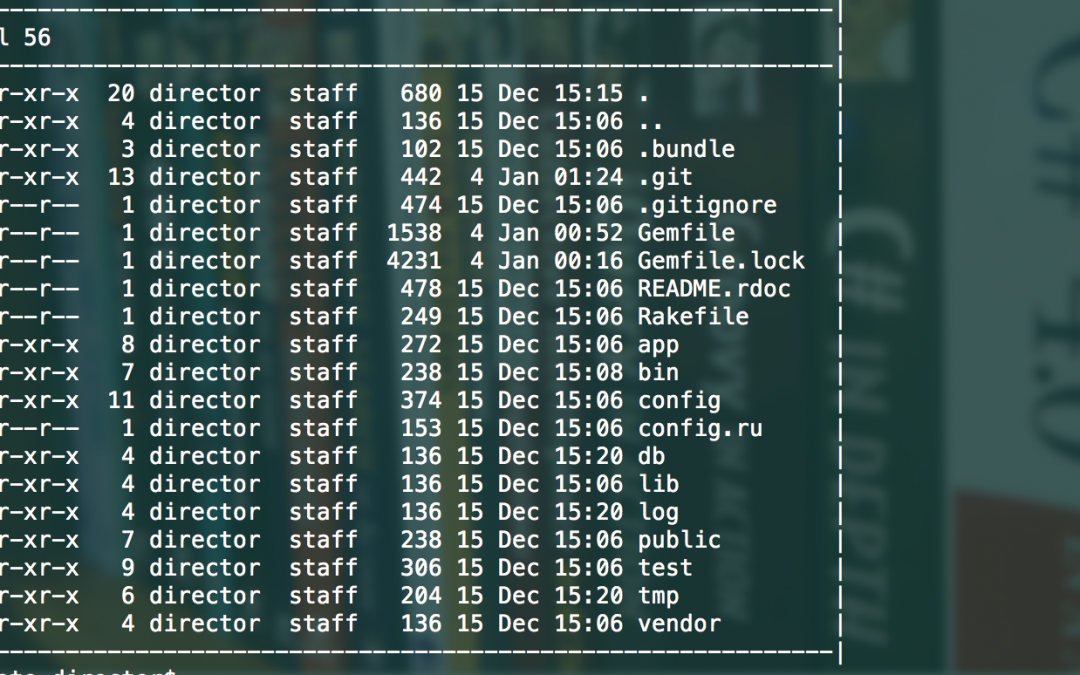

Big Data and Agile did not always seem to get along, but that is no longer the case. One of the most important concerns when processing data is data integrity. Assume you are pulling data from an API (Application Programming Interface), performing some processing on the result, and then dumping it as UTF-8 encoded, gzipped CSV files on Amazon's S3. The task is to confirm that the files are properly encoded (UTF-8), that each file has the appropriate headers, and that no row in any file has missing data, and finally to produce a report with filenames, column counts, record counts and encoding type. There are many languages available today and we could use any of them, but speed is of great importance. We also want to have a Jenkins (Continuous Integration server) job running these checks.

I have decided to use Bash to perform these checks, and I will do it twice! First I will use basic Bash commands, and then I will use csvkit (http://csvkit.readthedocs.org/). The other tool in the mix is the AWS command-line tool (aws-cli).
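To make the task concrete, here is a minimal sketch of what the basic-Bash checks could look like for a single file. It is an illustration under stated assumptions, not necessarily the script used later: the function name `check_csv_gz`, the status labels and the report layout are all hypothetical, and it assumes simple CSVs (comma-delimited, no quoted fields containing commas or newlines) with the file already downloaded locally.

```shell
#!/usr/bin/env bash
# Sketch only: validate one gzipped CSV and print a one-line report.
# Assumption: fields never contain quoted commas or embedded newlines.

# Usage: check_csv_gz FILE EXPECTED_HEADER
# Prints: filename,column_count,record_count,encoding,status
check_csv_gz() {
  local file="$1" expected_header="$2"
  local header cols records encoding bad status="ok"

  # iconv exits non-zero when the stream is not valid UTF-8.
  if gzip -dc "$file" | iconv -f UTF-8 -t UTF-8 >/dev/null 2>&1; then
    encoding="UTF-8"
  else
    encoding="invalid"
  fi

  # First line is the header; strip a trailing carriage return if present.
  header="$(gzip -dc "$file" 2>/dev/null | head -n 1 | tr -d '\r')"
  cols="$(printf '%s\n' "$header" | awk -F, '{ print NF }')"

  # Records are all lines after the header.
  records="$(gzip -dc "$file" | awk 'END { print (NR > 0 ? NR - 1 : 0) }')"

  # Count rows with the wrong number of fields or with any empty field.
  bad="$(gzip -dc "$file" | tr -d '\r' | LC_ALL=C awk -F, -v n="$cols" '
    NR > 1 {
      if (NF != n) { b++; next }
      for (i = 1; i <= NF; i++) if ($i == "") { b++; next }
    }
    END { print b + 0 }')"

  # If several checks fail, the last failing check below sets the status.
  [ "$encoding" = "UTF-8" ] || status="bad_encoding"
  [ "$header" = "$expected_header" ] || status="bad_header"
  [ "$bad" -eq 0 ] || status="incomplete_rows"

  printf '%s,%s,%s,%s,%s\n' \
    "$(basename "$file")" "$cols" "$records" "$encoding" "$status"
}
```

Assuming the files have first been copied down with something like `aws s3 cp s3://my-bucket/exports/ ./data --recursive` (the bucket and prefix are placeholders), the function could be run in a loop, for example `for f in ./data/*.csv.gz; do check_csv_gz "$f" "id,name"; done`, and a Jenkins job could fail the build whenever any report line does not end in `ok`.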